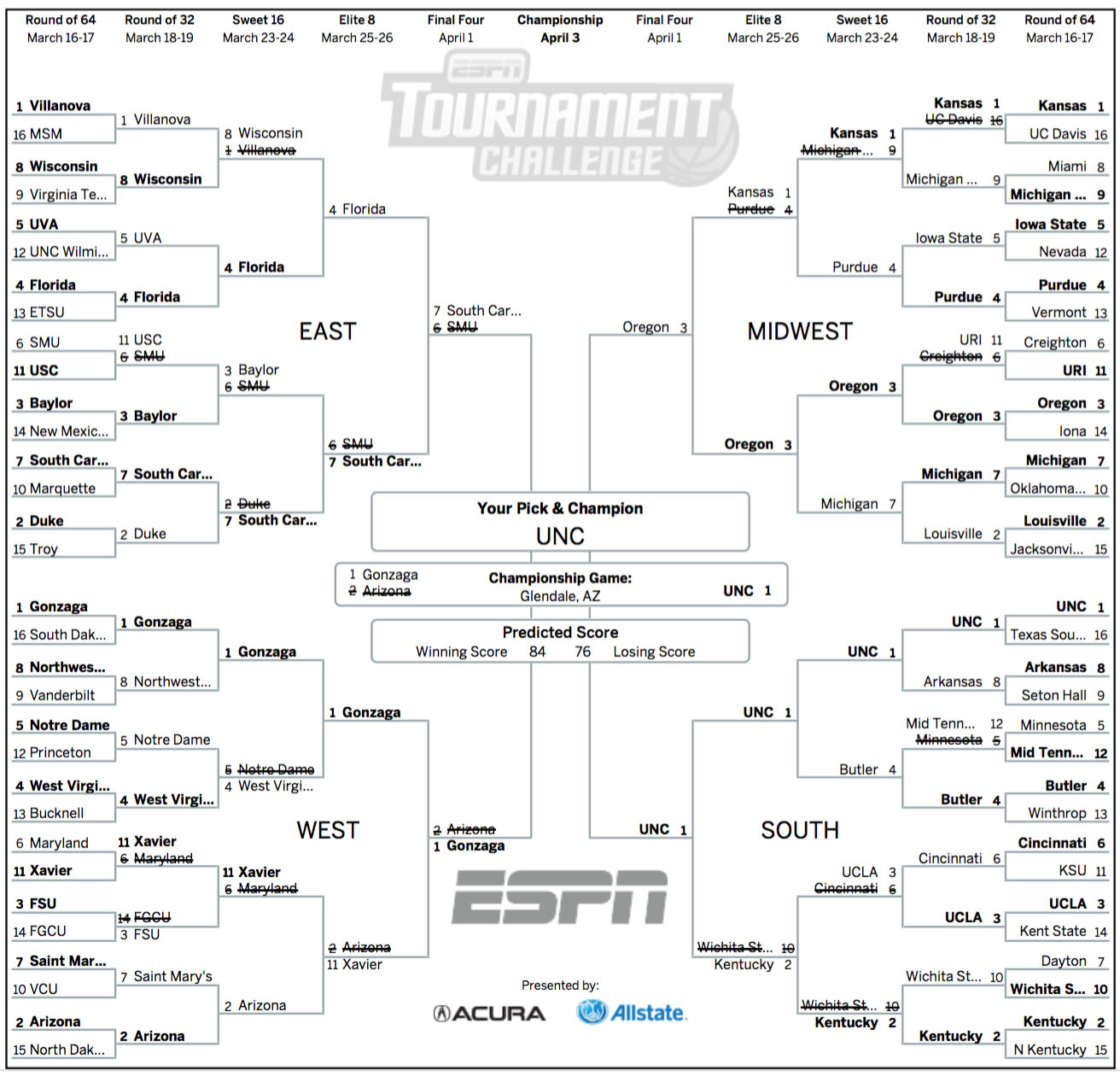

For this year’s March Madness tournament, I built an algorithmic bracket picker based on Dungeons and Dragons dice-roll battles. The bracket it generated, that I submitted for my company’s bracket competition, did surprisingly well: it correctly picked UNC to win the tournament and placed first overall in our bracket competition.

How accurate was it?

To examine the generated bracket’s accuracy, I loaded its predicted games and the

tournament’s actual games into SQL tables, actual_games and predicted_games:

CREATE TABLE actual_games (

round INT,

division VARCHAR(7),

game_number INT,

team_a VARCHAR(255),

team_b VARCHAR(255),

winner VARCHAR(255),

PRIMARY KEY(round, division, game_number)

);

To see how many games whose winners were accurately predicted by D&D, compare the winners:

SELECT picked_correctly, COUNT(*)

FROM (

SELECT

actual.round,

actual.division,

actual.game_number,

actual.winner=predicted.winner as picked_correctly

FROM actual_games as actual

INNER JOIN predicted_games as predicted

ON actual.round = predicted.round AND

actual.division = predicted.division AND

actual.game_number = predicted.game_number

) games GROUP BY 1;

Games Predicted Accurately

As the tournament goes on, previous mistakes make it increasingly harder to make correct picks. I broke the accuracy down by round number to see how it performed as the picks got harder.

SELECT round, COUNT(*), SUM(picked_correctly), SUM(picked_correctly)/COUNT(*) as percentage_correct FROM (

SELECT

actual.round,

actual.winner=predicted.winner as picked_correctly

FROM actual_games as actual

INNER JOIN predicted_games as predicted

ON actual.round = predicted.round AND

actual.division = predicted.division AND

actual.game_number = predicted.game_number

) x

GROUP BY 1

ORDER BY 1 DESC;

Games Predicted Accurately by Round

At least 50% accuracy throughout the tournament, not bad. The 100% in the championship round is misleading since there’s obviously only one game in that round.

The randomness/upset factor

It would be easy to just rank teams on seed number and come up with a fairly accurate bracket. The real magic of the tournament is in the Cinderella stories and huge unexpected upsets. The D&D algorithm accounts for these by introducing random factors like dragon attacks, which immediately end the battle and award a random team the victory. I wanted to see how well my random upset picks performed:

SELECT picked_correctly, COUNT(*)

FROM (

SELECT

actual_v.round,

actual_v.division,

actual_v.game_number,

actual_v.winner=predicted_v.winner as picked_correctly

FROM actual_v

INNER JOIN predicted_v

ON actual_v.round = predicted_v.round AND

actual_v.division = predicted_v.division AND

actual_v.game_number = predicted_v.game_number

WHERE (

(predicted_v.team_a_seed < predicted_v.team_b_seed and predicted_v.winner = predicted_v.team_b) OR

(predicted_v.team_b_seed < predicted_v.team_a_seed and predicted_v.winner = predicted_v.team_a)

)) x

GROUP BY 1;

Upsets Predicted Accurately

The bracket went 3-11 predicting upsets where a higher seeded team beat a lower seed. Not a great showing, but those games are really hard to predict using just the data the algorithm uses.

Next Year

For next year’s bracket, I will try to incorporate more metrics into the model to make battle logic smarter. This year’s iteration was only based on seed value and points scored and allowed. I’ll also take a few dragons out to cut down on false upsets.